Written by Andrew Neff

March 2019

What Survived Psychology's Replication Crisis?

In the age of alternative facts, many lament the loss of the good ol’ days when Walter Cronkite brought a singular incontrovertible truth to the people. When, because of this basic agreement, the good people of the USA would gather in squares and discuss the ramifications of the undisputed truths. Nowadays, of course, civil discourse is doomed - when we can’t agree about very basic stuff, we can’t engage in scholarly academic debate like we definitely for sure used to do. But disagreement over basic facts isn’t reserved for social and political issues.

The scientific method is more than just the process through which facts are discovered - it’s the foundation upon which much of modern society is built, it describes the intuitive process we use to understand most things in our lives, and it serves a social function, helping people persuade others that what they’ve discovered is truly a certifiable fact. But for the wise and skeptical scientist, sound hypotheses and explicit experimentation aren’t enough, because accidents happen, and people, even scientists, are prone to a bit of dishonesty driven by career advancement or personal bias when the opportunity presents itself (John et al., 2012). And so, practically, the scientific method isn’t complete until an experiment has been independently replicated by another scientist who doesn’t have a vested interest in whether or not your particular fact is true.

The trouble is that today, and maybe always, most studies are never given their due with a proper replication attempt. Somehow, the system we’ve got doesn’t incentivize scientists to validate other researchers’ findings. Instead, they’re finding it much more valuable to do their own fact discovering. And so, onward science progresses, building from facts that, had the original experiment been replicated, might not have been so factual after all. It means we’re being wasteful, building research programs on unsound science, and we’re self-deceived, promoting scientific theories that aren’t as well supported as they could be. And it’s giving many scientific purists the absolute fantods.

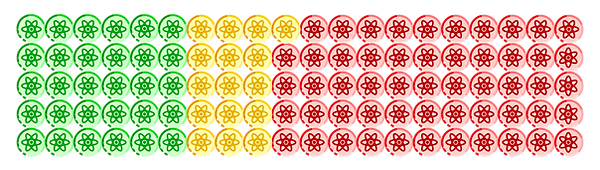

But just how concerned should we be? If, say, 95% of studies can be replicated, then, well, no big deal, right? But if, say, only one in a hundred studies could be replicated, we might just start thinking about voting a Republican into office to trash the system - might as well divert these funds to military expenditures or some other expression of racial intolerance. But what does the research say about... the research? In the field of psychology, the Open Science Collaboration took this idea very seriously, choosing 100 prominent research studies and attempting to replicate their findings in labs all over (Open Science Collaboration, 2015). And the result?

The result, in brief, was a bit breathtaking - fewer than half of the studies tested could be replicated in other labs. A disaster, sure, as we’re now scientifically-certifiably-sure that psychology is tragically flawed. We have every right to wallow and despair that psychology and probably many other fields are producing untrustworthy results and wasting, like, at least $20 of our yearly tax bills. And we can anguish that a good many of the people in science, like people in anything, are subject to bias driven by financial and personal incentives. And definitely, absolutely, we have to do something to reform basic scientific practices and the communication of our results.

But from another perspective, what the good people of the Open Science Collaboration did was provide an incredibly rare dataset that allows science to fulfill its promise of an impartial pursuit of truth. That is, we can comb through this dataset, and for those studies that proved replicable, we can now say with confidence that indeed, “Orienting attention in visual working memory reduces interference from memory probes”, or, also, some more exciting-sounding stuff.

In a way, for those studies that were successfully replicated, we’re back to the days of Walter Cronkite, where we have access to a ground truth that we all (probably should) agree upon. Where we can stop doubting the basic facts and, instead, debate what the facts mean. So over the next year or so, we’re embarking on a project to cover each of those successfully replicated studies one by one. In addition, we’ve created a Reddit community, /r/ReproduciblePsych/, and we are officially requesting that you post your thoughts, link to your writing, or point us to some reproducible psychological research that you’re interested in. And square-gathering and scholarly debate shall ensue.

A Quick Post-Script

To those authors whose work wasn’t successfully replicated, but who believe the replication attempt was faulty: sorry about the faulty replication, and sorry about our not covering your facts. Many of your concerns are undeniably valid (Gilbert et al., 2016), but, so it goes. The papers that we’re targeting are a sample of convenience; they’re by no means the only reproducible psychological research around. If you know of a psychological study that’s been followed up with a high-powered, pre-registered, successful replication, we want to hear about it! Please contact us with more information, or share in the subreddit.

References:

- Gilbert, Daniel T., et al. "Comment on “Estimating the reproducibility of psychological science”." Science 351.6277 (2016): 1037-1037.

- John, Leslie K., George Loewenstein, and Drazen Prelec. "Measuring the prevalence of questionable research practices with incentives for truth telling." Psychological Science 23.5 (2012): 524-532.

- Open Science Collaboration. "Estimating the reproducibility of psychological science." Science 349.6251 (2015): aac4716.